1. First, check for available updates so that the router also gets the latest security patches. Click System --> Packages --> Check For Updates; if a newer version is available, click Download & Install.

The screenshot below shows the download in progress.

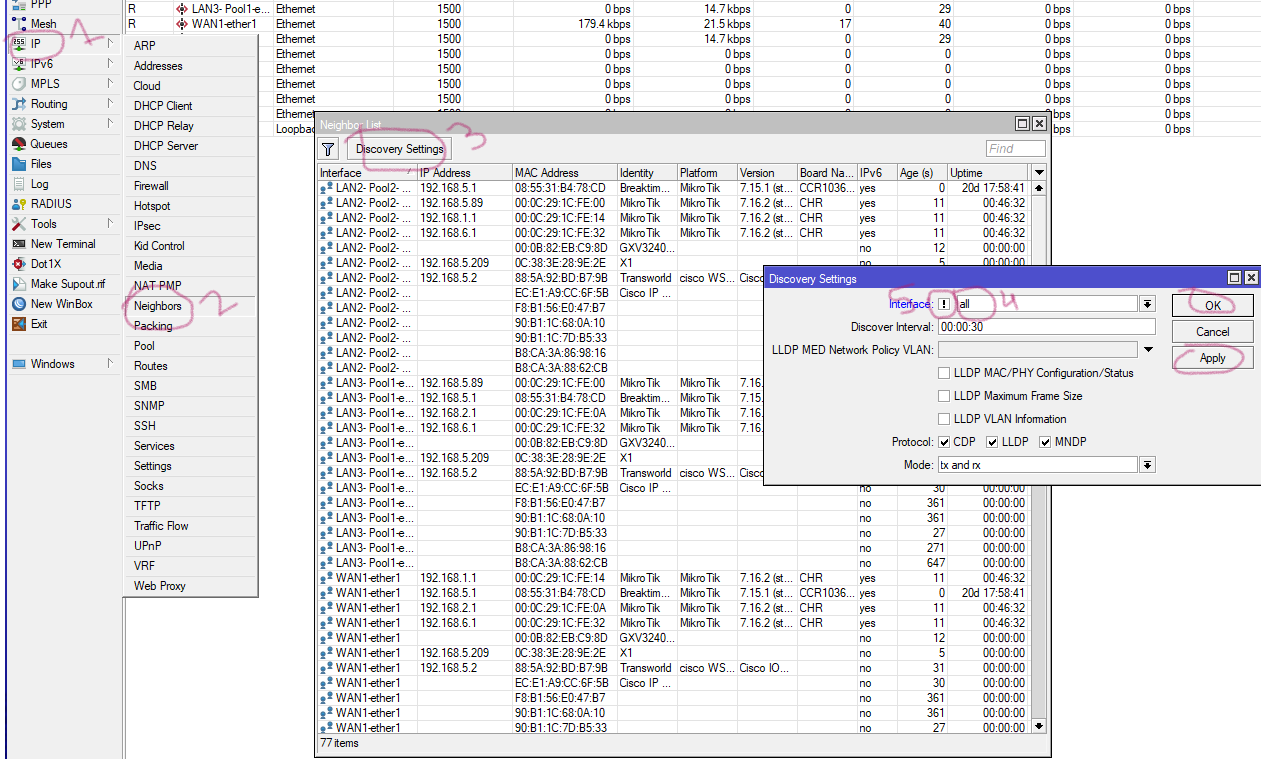

2. Set up a username and password other than the default admin account. Don't use common words in the username or password; always include uppercase and lowercase letters and special characters.

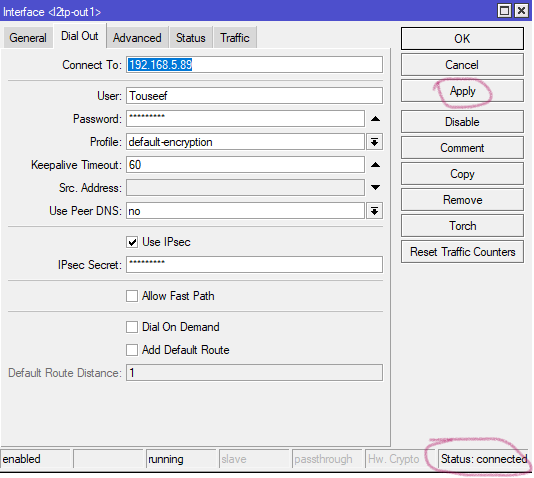

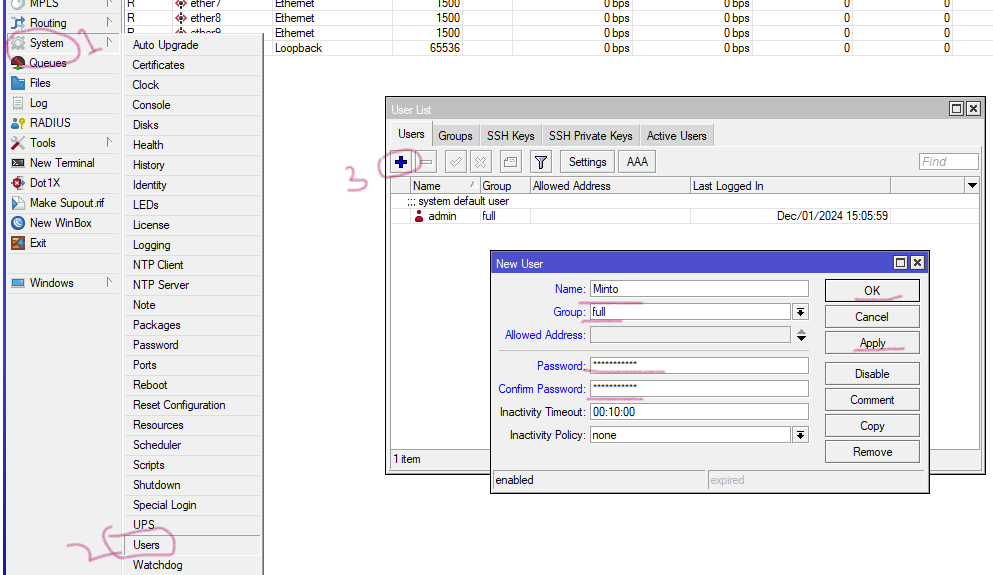

Click System --> Users --> the plus sign in the window, then fill in Name (the username), Group (full) and Password. Click Apply, then OK.

Log out and log in with the new user to confirm that it works.

Now you can disable the admin user.

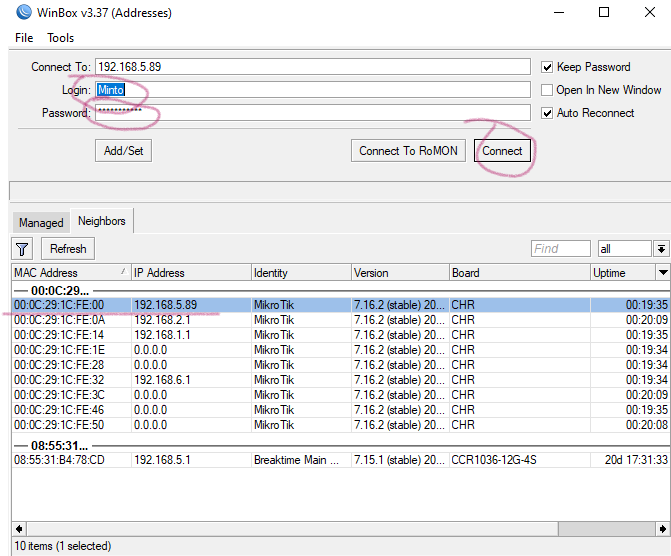

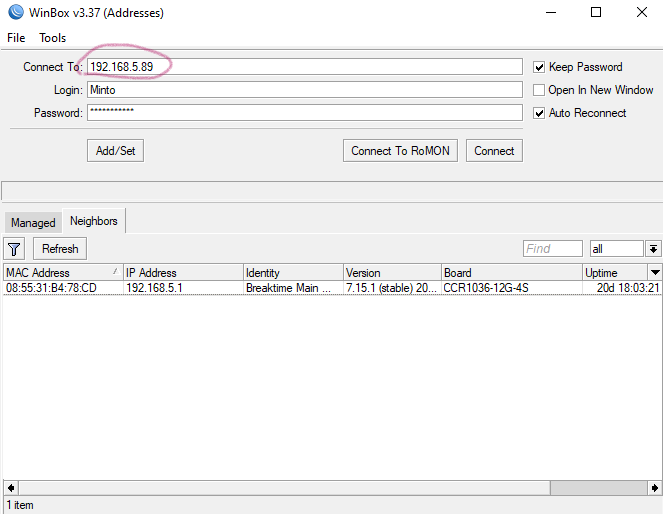
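The password advice in step 2 can be checked with a short script before you type the password into WinBox. This is only a minimal sketch: the function name `password_problems`, the 12-character minimum and the small `COMMON_WORDS` list are assumptions made for illustration, not MikroTik requirements.

```python
import string

# Hypothetical list of words to avoid; extend it with your own.
COMMON_WORDS = {"admin", "password", "mikrotik", "router", "winbox"}


def password_problems(password: str, min_length: int = 12) -> list[str]:
    """Return the reasons a password is weak; an empty list means it passes."""
    problems = []
    if len(password) < min_length:  # the minimum length is an assumption
        problems.append(f"shorter than {min_length} characters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letter")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letter")
    if not any(c in string.punctuation for c in password):
        problems.append("no special character")
    lowered = password.lower()
    for word in sorted(COMMON_WORDS):
        if word in lowered:
            problems.append(f"contains the common word '{word}'")
    return problems
```

An empty result means the password satisfies all of the checks above; anything else lists what to fix.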

3. The third step is to disable neighbor discovery. Otherwise, anyone who opens WinBox sees every MikroTik on the network along with its OS version, IP address, etc. Select IP --> Neighbors --> Discovery Settings, then choose all under Interface and tick the small box next to it (this inverts the selection). Click Apply, then OK.

You will see the effect below: 192.168.5.89 is no longer listed in WinBox.

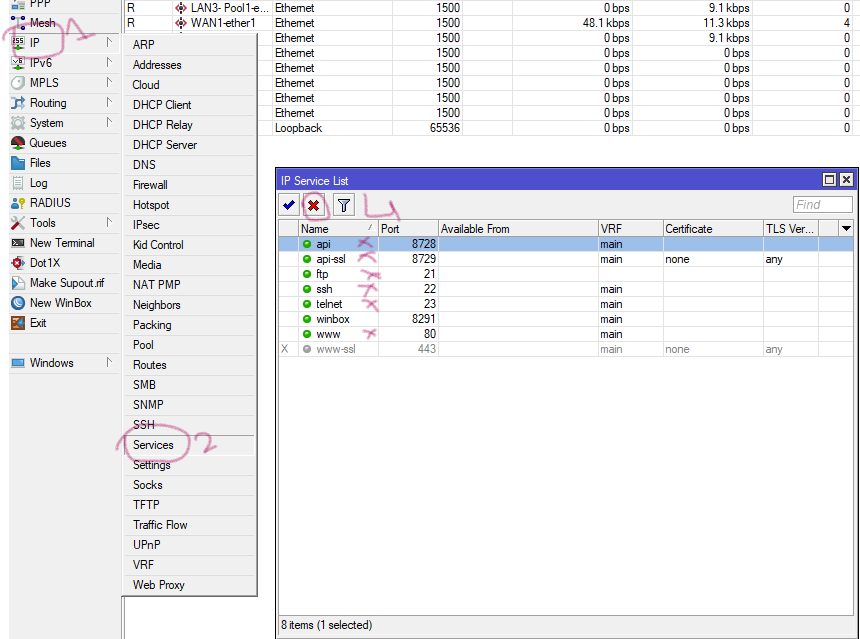

4. Step four is to disable unnecessary services. Go to IP --> Services, then select and disable the ones you don't use. For example, api provides access to the MikroTik from mobile apps, and www provides access from a web browser.

5. The fifth step is to disable all unused interfaces. Click Interfaces --> Ethernet, select the interface, right-click it and choose Disable.
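After disabling services in step 4, you can confirm from another computer that their ports no longer answer. The sketch below assumes the services still use their default RouterOS TCP ports. The function name `open_services` is made up for this example.

```python
import socket

# Default TCP ports of the RouterOS services (assumed unchanged on your router).
SERVICE_PORTS = {
    "ftp": 21,
    "ssh": 22,
    "telnet": 23,
    "www": 80,
    "www-ssl": 443,
    "winbox": 8291,
    "api": 8728,
    "api-ssl": 8729,
}


def open_services(host, ports=SERVICE_PORTS, timeout=1.0):
    """Return the names of the services that accept a TCP connection on host."""
    found = []
    for name, port in ports.items():
        try:
            # A successful connect means the service is still enabled and reachable.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(name)
        except OSError:
            # Refused, filtered or timed out: treat the service as not reachable.
            pass
    return found


# Example (assuming 192.168.5.89 is the router's address):
# print(open_services("192.168.5.89"))
```

A port that is blocked by a firewall rule looks the same as a disabled service here, so run the check from a computer that is allowed to reach the router.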

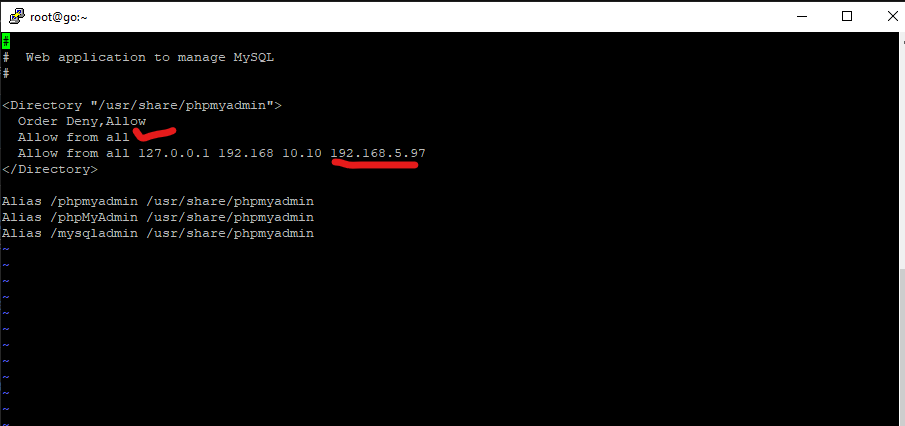

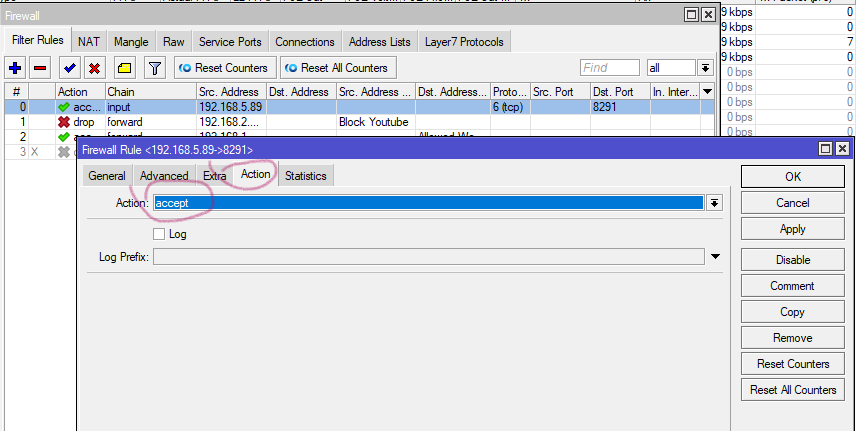

6. Allow access to the MikroTik from a specific IP address and deny everything else. This blocks access even if a person knows the username and password. Add a rule under IP --> Firewall --> Filter Rules: set Chain to input (because the traffic is addressed to the router itself), Src. Address to the computer's IP (192.168.5.97), Protocol to 6 (tcp) and Dst. Port to 8291 (the WinBox port), then set Action to accept so that this rule allows the connection.

Now create another rule to block all other IPs in your LAN.

Place this rule after the allow rule, because the first rule that matches decides what happens to the traffic.

If you want to record the IP addresses that try to log in to the MikroTik, choose the option below under Action.

Now you are good to go with your MikroTik.
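To see why the order matters, here is a small Python model of first-match rule evaluation. It is a simplified sketch and not RouterOS code: the `Rule` class and `decide` function are invented for illustration, and the LAN is assumed to be 192.168.5.0/24.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass
class Rule:
    action: str                      # "accept" or "drop"
    src: Optional[str] = None        # source address or network; None matches any
    dst_port: Optional[int] = None   # destination port; None matches any

    def matches(self, src_ip: str, dst_port: int) -> bool:
        if self.src is not None and ip_address(src_ip) not in ip_network(self.src):
            return False
        if self.dst_port is not None and self.dst_port != dst_port:
            return False
        return True


def decide(rules, src_ip, dst_port, default="accept"):
    """Return the action of the first matching rule, or the default if none match."""
    for rule in rules:
        if rule.matches(src_ip, dst_port):
            return rule.action
    return default


allow_pc = Rule("accept", src="192.168.5.97", dst_port=8291)
block_lan = Rule("drop", src="192.168.5.0/24", dst_port=8291)  # assumed LAN subnet

print(decide([allow_pc, block_lan], "192.168.5.97", 8291))  # accept
print(decide([allow_pc, block_lan], "192.168.5.50", 8291))  # drop
print(decide([block_lan, allow_pc], "192.168.5.97", 8291))  # drop: wrong order
```

With the block rule first, even the allowed computer is dropped, because the evaluation stops at the first rule that matches.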